Proof of Concept (POC): Analyzing Huge Application Log Files Using a Hadoop Cluster on IBM Cloud Platform

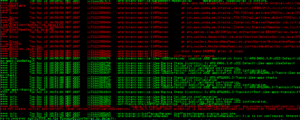

Analyzing application log files generated in a production environment is very challenging. Log data is unstructured, so it cannot be stored in an RDBMS and queried until it has been converted to a structured (row and column) format. As a result, when an application misbehaves only for a very short time, finding the relevant entries in log files that may total hundreds of terabytes is nearly impossible, which makes troubleshooting extremely difficult.

In our case study, an E-Commerce application running on the Oracle Web Commerce platform (ATG) intermittently failed to establish asynchronous communication with a third-party vendor for order fulfillment. JMS messaging was responsible for delivering the order submission message from ATG to the third-party vendor and vice versa, but delivery sometimes failed. Using a Hadoop cluster with a customized Map-Reduce program, we extracted the exact warnings and errors recorded in the log files produced by the out-of-the-box ATG component. After a detailed analysis of that framework component, based on the reports produced by the Hadoop job, we concluded that the issue lay within the ATG framework itself. We reported this to the software vendor and subsequently received a patch from them.
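
The Map-Reduce extraction described above can be illustrated with a small sketch. It is not the program used in the POC: the log line layout, the component path `/example/jms/MessageSender`, and the function names `map_line`, `reduce_counts`, and `run_job` are all assumptions made for illustration, and the job runs in plain Python rather than on a Hadoop cluster. The map step emits a count of 1 for every warning or error, keyed by severity and component, and the reduce step sums the counts per key.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Assumed (hypothetical) log layout, not the real ATG format:
#   <date> <time> <LEVEL> [<component path>] <message>
LOG_PATTERN = re.compile(
    r"^(?P<ts>\S+ \S+) (?P<level>[A-Z]+) \[(?P<component>[^\]]+)\] (?P<msg>.*)$"
)
LEVELS_OF_INTEREST = {"WARN", "ERROR"}

Key = Tuple[str, str]  # (level, component)


def map_line(line: str) -> Iterator[Tuple[Key, int]]:
    """Map step: emit ((level, component), 1) for warnings and errors only."""
    match = LOG_PATTERN.match(line.strip())
    if match and match.group("level") in LEVELS_OF_INTEREST:
        yield (match.group("level"), match.group("component")), 1


def reduce_counts(pairs: Iterable[Tuple[Key, int]]) -> Dict[Key, int]:
    """Reduce step: sum the emitted counts for each key."""
    totals: Dict[Key, int] = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


def run_job(lines: Iterable[str]) -> Dict[Key, int]:
    """Run map and reduce in-process; Hadoop would distribute both steps."""
    pairs = (pair for line in lines for pair in map_line(line))
    return reduce_counts(pairs)


# Made-up sample lines in the assumed layout.
SAMPLE_LOG = [
    "2015-03-01 10:00:01 INFO [/example/order/OrderManager] order o100 created",
    "2015-03-01 10:00:02 ERROR [/example/jms/MessageSender] send failed for o100",
    "2015-03-01 10:00:03 WARN [/example/jms/MessageSender] retrying o100",
    "2015-03-01 10:00:04 ERROR [/example/jms/MessageSender] send failed for o100",
    "a malformed line that the mapper skips",
]

if __name__ == "__main__":
    for (level, component), count in sorted(run_job(SAMPLE_LOG).items()):
        print(f"{level}\t{component}\t{count}")
```

Keying on both severity and component means the output groups failures by the component that logged them, which is the kind of report that would point to a single framework component as the source of a problem. On a real cluster, the same two steps could be written as Java `Mapper` and `Reducer` classes or as Hadoop Streaming scripts.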