Real-time distributed data streaming

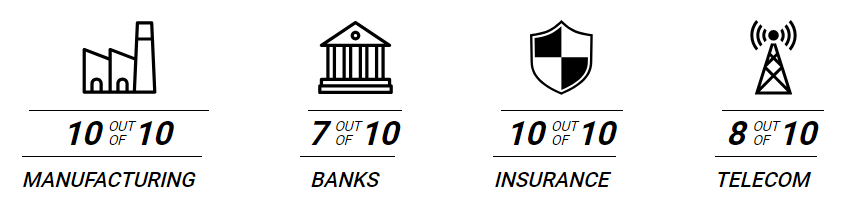

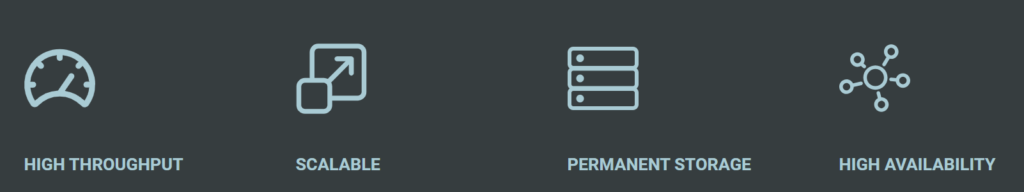

Apache Kafka® is an open source distributed event streaming platform used for building high-performance real-time data pipelines, streaming analytics, data integration, and mission-critical applications. It is horizontally scalable, fault-tolerant, and extremely fast, and more than 80% of all Fortune 100 companies trust and use Kafka.

Originally written in Scala and Java, Apache Kafka first started at LinkedIn. It provides a publish-subscribe mechanism for processing and storing data streams in a fault-tolerant way. It can ingest streaming social data, geospatial data, or sensor data from various devices. Kafka integrates with Spark, Hadoop, Storm, HBase, Flink, and many other systems for big data analytics.
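To make the publish-subscribe idea concrete, here is a minimal in-memory sketch in Python. It is not the Kafka client API: `ToyTopic`, `publish`, and `poll` are hypothetical names, and the model assumes a single partition with no persistence or replication. It only illustrates that records are appended to a log and that each consumer group tracks its own read position.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a single-partition topic: an append-only log."""

    def __init__(self):
        self._log = []                    # records are not removed when read
        self._offsets = defaultdict(int)  # next offset to read, per consumer group

    def publish(self, record):
        """Append a record and return its offset in the log."""
        self._log.append(record)
        return len(self._log) - 1

    def poll(self, group, max_records=10):
        """Return unread records for a group and advance that group's offset."""
        start = self._offsets[group]
        batch = self._log[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


# Hypothetical sensor readings published by a producer.
topic = ToyTopic()
for reading in ({"sensor": "s1", "temp": 21.5}, {"sensor": "s2", "temp": 19.0}):
    topic.publish(reading)

# Two independent groups each receive the full stream.
analytics_batch = topic.poll("analytics")
archive_batch = topic.poll("archive")
```

Because reading does not delete records, both hypothetical groups ("analytics" and "archive") see the same two readings, and a second poll by either group returns only records published since its last read.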

Using Kafka for real-time streaming

To build real-time streaming applications that react to data streams and perform real-time analytics.

To transform, aggregate, join, and react to real-time data flows.

To perform complex event processing.

To work with other platforms such as Flink and Druid, and to provide solutions for stream processing, messaging, analytics, website activity tracking, log aggregation, and operational metrics.
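As a rough illustration of what "aggregate and join" means for a stream, the Python sketch below counts events per one-minute tumbling window after joining each event to a lookup table. It runs on a plain list rather than a live Kafka topic, and the event fields, the `user_regions` table, and the 60-second window are all assumptions made for the example.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical tumbling-window size


def window_start(timestamp):
    """Align an epoch-seconds timestamp to the start of its tumbling window."""
    return timestamp - (timestamp % WINDOW_SECONDS)


def count_views_by_region(events, user_regions):
    """Join each event to its user's region, then count events per (window, region)."""
    counts = defaultdict(int)
    for event in events:
        # Stream-table join: enrich the event from the lookup table.
        region = user_regions.get(event["user"], "unknown")
        counts[(window_start(event["ts"]), region)] += 1
    return dict(counts)


# Hypothetical page-view events (epoch seconds) and a user -> region lookup table.
events = [
    {"user": "alice", "ts": 5},
    {"user": "bob", "ts": 42},
    {"user": "alice", "ts": 61},
    {"user": "carol", "ts": 75},
]
user_regions = {"alice": "EU", "bob": "US"}

result = count_views_by_region(events, user_regions)
```

In a production system the same logic would typically live in a stream processor such as Kafka Streams or Flink, which also handles state storage, late-arriving events, and fault tolerance that this sketch ignores.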

Build real-world big data applications with Kafka

Design & build new systems with Kafka

From designing and building new systems to fine-tuning existing ones, Irisidea offers a complete range of Apache Kafka & Confluent Kafka application consulting & solutions.

We help teams design and build platforms that successfully and efficiently meet business and technical requirements.

Extensive Kafka implementation experience

With extensive experience developing, deploying, and optimizing Kafka, we ensure high-quality implementations on-prem and on cloud platforms such as AWS, Google Cloud, and Azure.

We follow best practices for performance and storage goals, cluster resilience across availability zones, disaster recovery, integration between cloud and on-prem clusters, security requirements, and more.

Our Kafka consulting offerings

Kafka application consulting

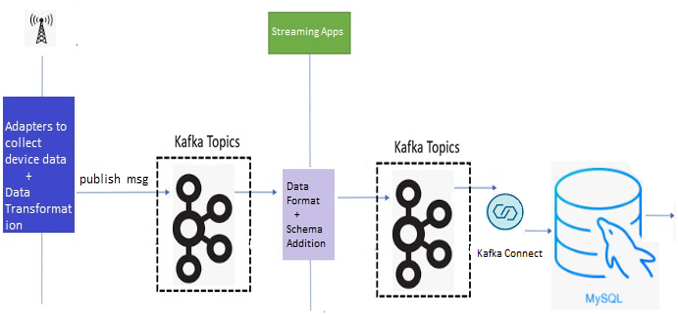

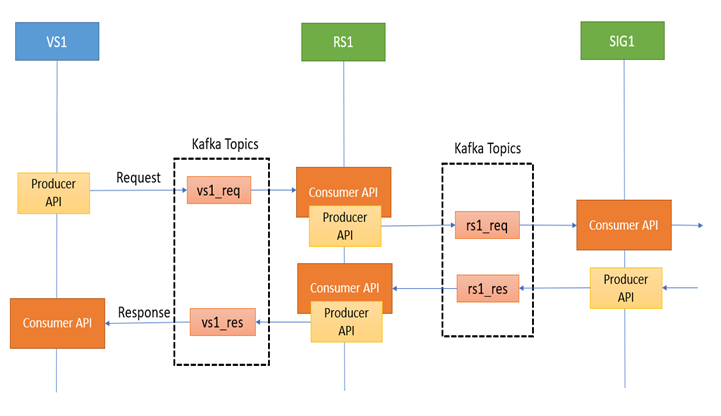

- Application architecture, solution design, and development

- Detailed system design and implementation for scalability and fault tolerance

- Integration/ingestion design with other systems and implementation

- Connector development and implementation

- Proof-of-concept development

- Real-time streaming application design using Kafka Clients and the Streams API

Kafka infrastructure consulting

- Cluster sizing, capacity planning, infrastructure design, and implementation

- Benchmarking and tuning for throughput, latency, and reliability

- Multi-data-center design and implementation

- DR/failover, active/passive implementation and testing

- Upgrade assessment, planning, and implementation

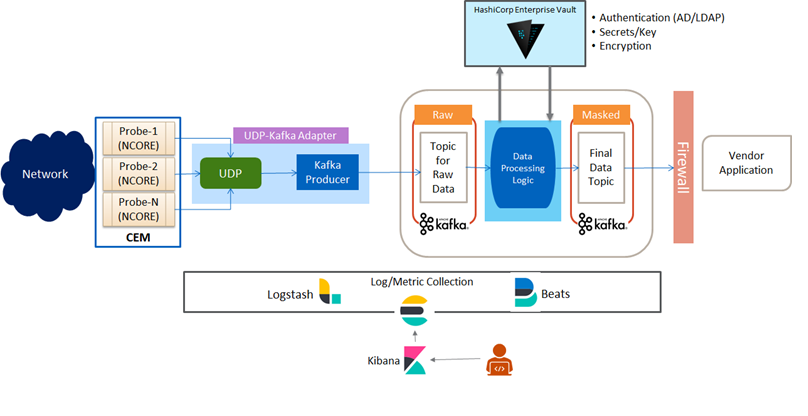

- Design, configure and implement metric monitoring and alerts

- Log collection and integration with ELK platform

Kafka security implementation

- Kafka security design and implementation (Authentication and Authorization)

- Wire-level encryption implementation

- Data-at-rest encryption implementation

- Design and implementation of audit trails

- Cloud security implementation

- Integration with Vault and Key Management Platform

- Encryption Key Management Integration

How do we solve data streaming & processing challenges?

Kafka architecture development

Our Kafka experts work with your team to review your existing solution, share best practices, identify areas for improvement, explain how to avoid errors, recommend hardware requirements, and more.

Health check & preventative maintenance

Our Kafka experts work alongside your team to review your current Kafka solution, assessing it for reliability, scalability, latency, throughput, monitoring, log management, hardware, preventative maintenance, and more.

Supporting tools & technologies

Our consultants can assist you not only with your Kafka solution but also with other critical components of your data architecture, providing a uniquely comprehensive approach.

Kafka upgrades

Have one of our Kafka experts review your production upgrade plans and help you understand the differences between versions while maintaining your SLAs.

Engagement model

We’ve helped companies architect and optimize their Kafka solutions. Our Kafka experts can help you save time and resources, avoid errors, apply best practices, and deploy high-performance streaming platforms that scale.

Complete solution from scratch

Short-term ad-hoc work

Post deployment support

Success Stories

Real-time analytics on Real-time Streaming?

Integrating Kafka with Apache Druid to analyse data in motion

Apache Druid is a real-time analytics database designed for fast slice-and-dice analytics (“OLAP” queries) on large data sets. Most often, Druid powers use cases where real-time ingestion, fast query performance, and high uptime are important.

Druid is commonly used as the database backend for GUIs of analytical applications, or for highly concurrent APIs that need fast aggregations. Druid works best with event-oriented data.
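Druid can read from Kafka through a supervisor spec that names the topic, the brokers, and the schema. The Python sketch below builds such a spec as a dictionary. The field names follow the Druid Kafka ingestion documentation as we understand it, so check them against your Druid version. The datasource, topic, broker address, timestamp column, and dimension names are placeholders, and `kafka_supervisor_spec` is a hypothetical helper.

```python
import json


def kafka_supervisor_spec(datasource, topic, bootstrap_servers, dimensions):
    """Build a Kafka supervisor spec as a plain dict (all values are placeholders)."""
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                # Assumes each event carries an ISO-8601 "timestamp" field.
                "timestampSpec": {"column": "timestamp", "format": "iso"},
                "dimensionsSpec": {"dimensions": dimensions},
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "HOUR",
                    "queryGranularity": "NONE",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "topic": topic,
                "inputFormat": {"type": "json"},
                "consumerProperties": {"bootstrap.servers": bootstrap_servers},
                "useEarliestOffset": True,
            },
            "tuningConfig": {"type": "kafka"},
        },
    }


spec = kafka_supervisor_spec(
    datasource="clickstream",          # hypothetical Druid datasource name
    topic="clickstream",               # hypothetical Kafka topic
    bootstrap_servers="localhost:9092",
    dimensions=["user", "page", "country"],
)
payload = json.dumps(spec, indent=2)
```

The resulting JSON payload is typically submitted with an HTTP POST to the supervisor endpoint (`/druid/indexer/v1/supervisor`), after which Druid manages the ingestion tasks that consume the topic.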

Common application areas of Druid

- Clickstream analytics including web and mobile analytics

- Network telemetry analytics including network performance monitoring

- Server metrics storage

- Supply chain analytics including manufacturing metrics

- Application performance metrics

- Digital marketing/advertising analytics

- Business intelligence/OLAP