- Products & Platforms

- RouteEye – Real-time Vehicle Tracking System

- Kalrav AI Agent – A Powerful, Customizable AI Agent

- LeafAid AI – Your Smart Crop & Plant Health Companion

- Staff IRIS – GPS-based Real-time Monitoring of Field Staff

- Koolanch – Self-service Data Integration Platform

- SkillAnything – eLearning Platform for Organizations

- KafkaView – Real-time Kafka Cluster Monitoring

- Cloud & Data Engineering

- IoT

- AI & ML

- Solutions

- Company

+91 (988) 002 7443 | [email protected]

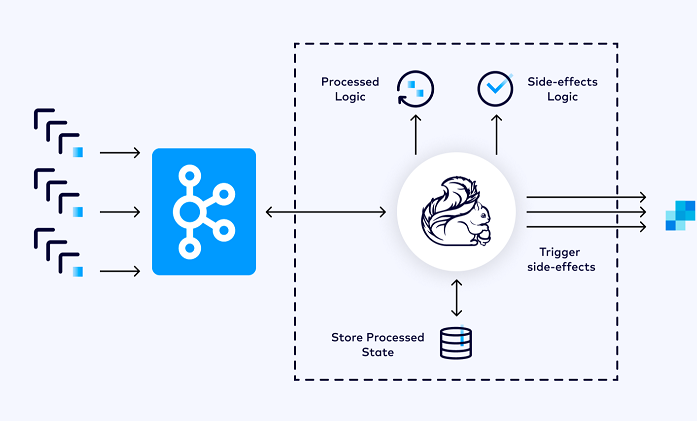

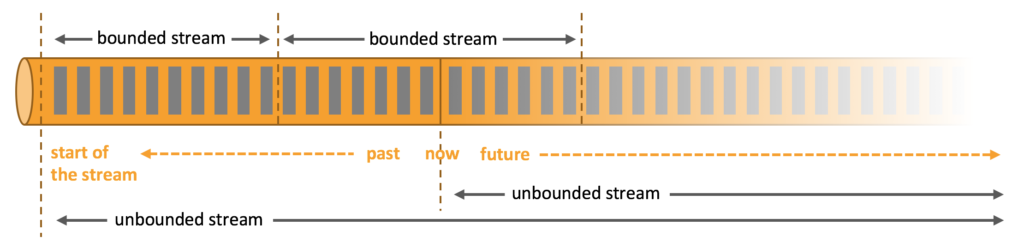

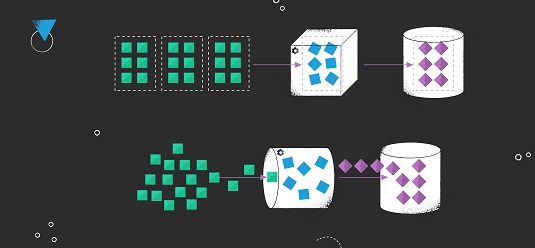

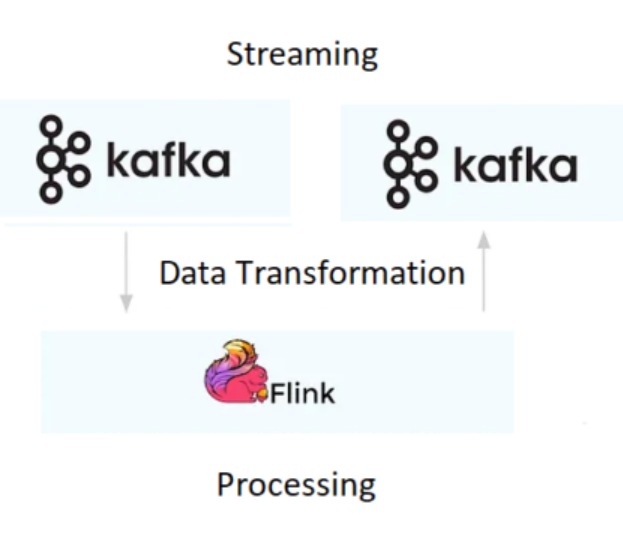

Process Streaming Data with Apache Flink

© Copyright 2026. All Rights Reserved.