Process Streaming Data with Apache Flink

© Copyright 2024. All Rights Reserved.

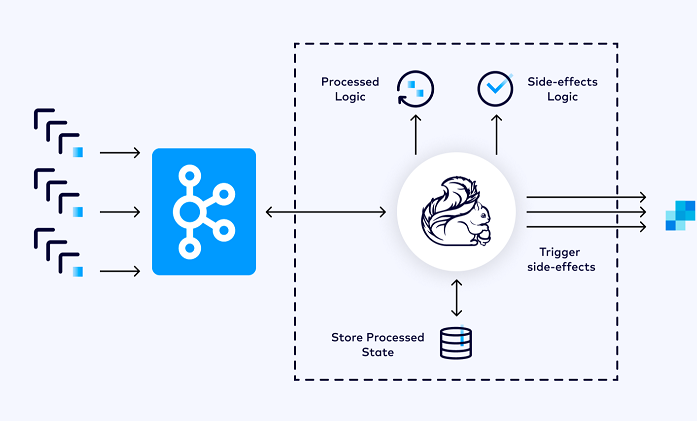

An event-driven application is a stateful application that ingests events from one or more event streams and reacts to incoming events by triggering computations, state updates, or external actions.
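
The pattern can be illustrated without any Flink dependency. The following is a minimal sketch in plain Python, not Flink's API: the `Event` and `ThresholdAlerter` names, the per-key running total, and the alert list standing in for an external action are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    key: str        # hypothetical identifier, e.g. an account or device id
    amount: float


class ThresholdAlerter:
    """Keeps a running total per key and reacts when it crosses a limit."""

    def __init__(self, limit: float):
        self.limit = limit
        self.totals = defaultdict(float)  # per-key application state
        self.alerts = []                  # stands in for an external action

    def on_event(self, event: Event) -> None:
        previous = self.totals[event.key]
        current = previous + event.amount   # computation
        self.totals[event.key] = current    # state update
        # React only at the moment the total crosses the limit.
        if previous <= self.limit < current:
            self.alerts.append(f"{event.key} exceeded {self.limit}")


app = ThresholdAlerter(limit=100.0)
events = [Event("a", 60.0), Event("b", 30.0), Event("a", 50.0), Event("a", 10.0)]
for e in events:
    app.on_event(e)

print(app.alerts)
```

Each incoming event triggers a computation and a state update, and only some events lead to an external action. In a real deployment the state would be managed and checkpointed by the stream processor rather than held in a local dictionary.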

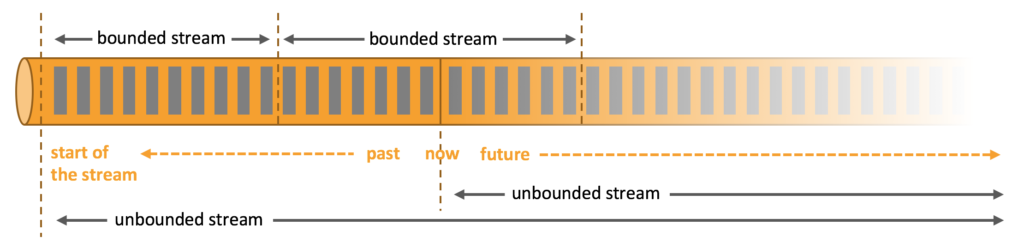

Analytical jobs extract information and insight from raw data. Apache Flink supports both traditional batch queries on bounded data sets and real-time, continuous queries on unbounded, live data streams.
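
The difference between the two query styles can be sketched in plain Python. This is an illustration of the concept only, not Flink code; the function names and the sample readings are assumptions.

```python
from typing import Iterable, Iterator, List


def batch_average(values: List[float]) -> float:
    """Bounded input: all data is available, so one final result is returned."""
    return sum(values) / len(values)


def continuous_average(stream: Iterable[float]) -> Iterator[float]:
    """Unbounded input: emit an updated result after every incoming event."""
    count, total = 0, 0.0
    for value in stream:
        count += 1
        total += value
        yield total / count


readings = [10.0, 20.0, 30.0]

final_result = batch_average(readings)

# The same readings consumed one at a time, as if they arrived live.
running_results = list(continuous_average(iter(readings)))

print(final_result, running_results)
```

The batch query produces a single answer once the input ends, while the continuous query keeps refining its answer and never needs to see the end of the stream.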

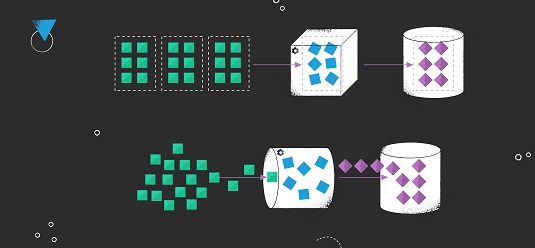

Extract-transform-load (ETL) is a common approach to converting and moving data between storage systems.
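
As a rough sketch of the three stages, the example below moves data from a CSV text source into an in-memory SQLite table using only the Python standard library. The column names, the cents-to-currency conversion, and the `payments` table are hypothetical; a Flink ETL job would run the same stages continuously rather than once.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system.
RAW_CSV = "user_id,amount_cents\n1,1250\n2,990\n1,300\n"


def extract(text):
    """Extract: parse the raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types and convert cents to whole currency units."""
    return [(int(r["user_id"]), int(r["amount_cents"]) / 100) for r in rows]


def load(conn, records):
    """Load: write the cleaned records into the target store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments (user_id INTEGER, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))

totals = conn.execute(
    "SELECT user_id, SUM(amount) FROM payments GROUP BY user_id ORDER BY user_id"
).fetchall()
print(totals)
```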

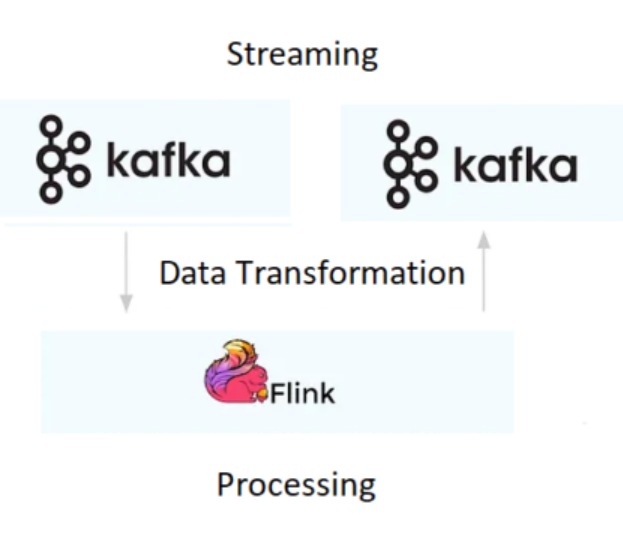

Unlike message brokers such as ActiveMQ and RabbitMQ, Kafka can durably store data streams indefinitely, so consumers can read streams in parallel and replay them as necessary. This aligns with Flink’s distributed processing model and fulfills a crucial requirement of Flink’s fault tolerance mechanism: after a failure, Flink restores its state from the latest checkpoint and must be able to re-read the source from the corresponding position.
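
Why replay matters for recovery can be shown with a small simulation. This is a sketch in plain Python, not Flink's checkpointing API or a Kafka client: `DurableLog`, `run`, the checkpoint-every-second-record policy, and the simulated crash are all assumptions chosen to keep the example short.

```python
class DurableLog:
    """Append-only log; reading never removes records (like a retained topic)."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)

    def read_from(self, offset):
        return self._records[offset:]


def run(log, checkpoint, fail_after=None):
    """Sum records starting from a checkpoint of (offset, running_total).

    Returns (checkpoint, finished). A checkpoint is taken after every
    second record; on a simulated crash, work since the last checkpoint
    is lost and only the checkpoint survives.
    """
    offset, total = checkpoint
    processed = 0
    for value in log.read_from(offset):
        if fail_after is not None and processed == fail_after:
            return checkpoint, False  # simulated crash
        total += value
        offset += 1
        processed += 1
        if offset % 2 == 0:
            checkpoint = (offset, total)
    return (offset, total), True


log = DurableLog()
for v in [1, 2, 3, 4, 5]:
    log.append(v)

# First attempt crashes after three records; only the checkpoint survives.
checkpoint_after_crash, finished = run(log, (0, 0), fail_after=3)

# Recovery: restore the state and replay the log from the saved offset.
final_state, finished_again = run(log, checkpoint_after_crash)

print(checkpoint_after_crash, final_state)
```

Because the log still holds the third record after the crash, the restarted run can re-read it and arrive at the same total as a run that never failed. A broker that deletes messages once they are consumed could not support this kind of recovery.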

Flink applications can use Kafka as both a source and a sink, and can draw on the tools and services available in the Kafka ecosystem. Flink offers native support for commonly used formats such as Avro, JSON, and Protobuf, the same formats commonly used with Kafka.
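
The role a format plays between a source and a sink can be sketched as follows. This is plain Python with in-memory lists standing in for Kafka topics; it does not use Flink's or Kafka's connectors, and the record fields and the `active` flag are hypothetical.

```python
import json

# In-memory stand-ins for a source topic and a sink topic (assumption).
source_topic = [
    b'{"user": "ana", "clicks": 3}',
    b'{"user": "bo", "clicks": 0}',
]
sink_topic = []


def deserialize(raw: bytes) -> dict:
    """Source side: decode JSON bytes into a record."""
    return json.loads(raw.decode("utf-8"))


def serialize(record: dict) -> bytes:
    """Sink side: encode a record back into JSON bytes."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


for raw in source_topic:
    record = deserialize(raw)
    if record["clicks"] > 0:       # keep only users with activity
        record["active"] = True    # enrich the record
        sink_topic.append(serialize(record))

print(sink_topic)
```

The processing logic works on decoded records and never touches raw bytes, which is what lets the same job switch between JSON, Avro, or Protobuf by changing only the format at the source and sink.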

A topic in a Kafka cluster is mapped to a table in Flink, and events are appended to the Flink table in the same way they are appended to the Kafka topic. In Flink, each table is equivalent to a stream of events that describe the changes being made to that table. When a query refers to the table, the table is updated automatically, and the query's results are either materialized or emitted.
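
The equivalence between a table and a stream of changes can be sketched by replaying a changelog into a dictionary. The `+I`, `-U`, `+U`, and `-D` markers follow the row kinds Flink uses for insert, update-before, update-after, and delete; everything else (the `materialize` function, the keyed dictionary as a table, the sample rows) is a simplifying assumption rather than Flink's implementation.

```python
def materialize(changelog):
    """Replay a stream of change events into the table state they describe."""
    table = {}
    for op, key, value in changelog:
        if op in ("+I", "+U"):       # insert, or the new image of an update
            table[key] = value
        elif op in ("-U", "-D"):     # retract the old image, or delete
            table.pop(key, None)
        else:
            raise ValueError(f"unknown change kind: {op}")
    return table


changelog = [
    ("+I", "ana", 1),   # ana appears with count 1
    ("+I", "bo", 1),    # bo appears with count 1
    ("-U", "ana", 1),   # ana's old row is retracted
    ("+U", "ana", 2),   # ana's new row replaces it
    ("-D", "bo", 1),    # bo is deleted
]

table = materialize(changelog)
print(table)
```

Replaying the full changelog always reproduces the current table, and any prefix of it reproduces the table as it looked at that point in time.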