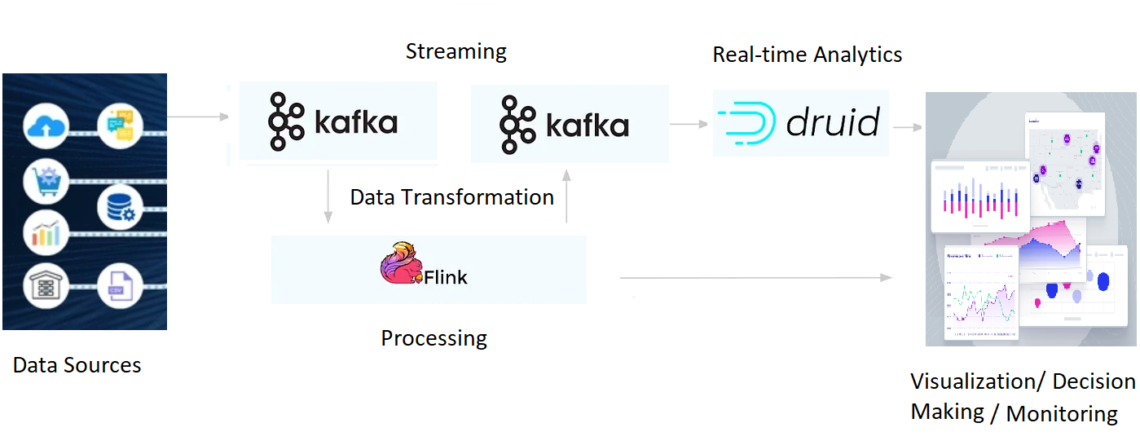

Driving Business Growth with Apache Kafka – Flink – Druid

© Copyright 2024. All Rights Reserved.