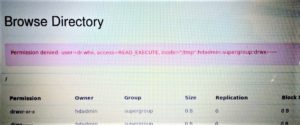

Error while batch processing data at rest in a basic Hadoop (HDFS) based Data Lake: “Permission denied: user=dr.who, access=READ_EXECUTE, inode="/tmp":hdadmin:supergroup:drwx……..”

Persisting unstructured data and batch processing it later can be very costly, which makes it hard to justify for small organizations and startups, for whom cost is a prime factor.

A Hadoop-based Data Lake using MapReduce fits this scenario well: it is not only cost-effective but also scalable and easy to extend. Although it sounds like a great option, we may run into issues while setting it up, and one of the common ones is the error “Permission denied: user=dr.who, access=READ_EXECUTE, inode="/tmp":hdadmin:supergroup:drwx……..”.

It occurs when:

- We submit the MapReduce job to a multi-node cluster from the command prompt without creating a new HDFS user,

- We forget to set the Hadoop temp directory location parameter (hadoop.tmp.dir) in the core-site.xml file, and

- We forget to change the access privileges on the HDFS directory /user before starting the cluster, which is a mandatory step.
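To see why such a request is rejected, here is a simplified Python model of the owner/group/other check HDFS applies to an inode. It is a sketch, not HDFS source code: it ignores ACLs, and it assumes the superuser is hdadmin (the account that started the cluster, passed via the hypothetical `superuser` parameter) plus members of supergroup, the default value of dfs.permissions.superusergroup. The original message truncates the mode string, so the mode drwx------ used below is a hypothetical value for illustration only.

```python
from dataclasses import dataclass

# Bits required by each FsAction-style access name seen in HDFS error messages.
REQUIRED_BITS = {
    "NONE": "",
    "EXECUTE": "x",
    "WRITE": "w",
    "WRITE_EXECUTE": "wx",
    "READ": "r",
    "READ_EXECUTE": "rx",
    "READ_WRITE": "rw",
    "ALL": "rwx",
}


@dataclass(frozen=True)
class Inode:
    path: str
    owner: str
    group: str
    mode: str  # symbolic mode such as "drwxr-xr-x" (values below are hypothetical)


def permitted(user, user_groups, inode, access,
              superuser="hdadmin", superusergroup="supergroup"):
    """Simplified HDFS-style permission check (ACLs are not modelled)."""
    # Superusers bypass permission checks entirely.
    if user == superuser or superusergroup in user_groups:
        return True
    # Exactly one triplet applies: owner, else group, else other.
    if user == inode.owner:
        bits = inode.mode[1:4]
    elif inode.group in user_groups:
        bits = inode.mode[4:7]
    else:
        bits = inode.mode[7:10]
    # A sticky bit shows as "t" (execute set) or "T" (execute not set).
    bits = bits.replace("t", "x").replace("T", "-")
    return all(flag in bits for flag in REQUIRED_BITS[access])


if __name__ == "__main__":
    tmp = Inode("/tmp", "hdadmin", "supergroup", "drwx------")  # hypothetical mode
    print(permitted("dr.who", set(), tmp, "READ_EXECUTE"))      # False
    print(permitted("hdadmin", set(), tmp, "READ_EXECUTE"))     # True
```

With a mode like drwx------, dr.who is neither the owner nor a member of supergroup, so only the empty "other" triplet applies and READ_EXECUTE is denied, while hdadmin passes as the superuser.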

Here are two tips to avoid such exceptions and allow MapReduce jobs to execute.

- Create a new HDFS user

Create a new HDFS user by creating a directory under /user; this directory serves as the HDFS “home” directory for that user. Before this, the required permissions should also be set on the Hadoop temp directory.

$ hdfs dfs -mkdir /user/<new hdfs user directory name>
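The mkdir above creates the home directory, but the new directory is owned by whichever user ran the command. A minimal Python sketch that prints (and optionally runs) a common two-step sequence is below. The extra -chown step, the function names, the user name etluser and the group hadoop are assumptions for illustration; running it for real requires the hdfs CLI on the PATH and an HDFS superuser account such as hdadmin, since only a superuser may change ownership.

```python
import shlex
import subprocess


def home_dir_commands(user, group):
    """Build the hdfs CLI calls that create /user/<user> and hand it to that user."""
    home = f"/user/{user}"
    return [
        ["hdfs", "dfs", "-mkdir", "-p", home],
        ["hdfs", "dfs", "-chown", f"{user}:{group}", home],
    ]


def create_home_dir(user, group, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in home_dir_commands(user, group):
        print(shlex.join(cmd))
        if not dry_run:
            # Must be run as the HDFS superuser (e.g. hdadmin) for -chown to succeed.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run with hypothetical names; prints:
    # hdfs dfs -mkdir -p /user/etluser
    # hdfs dfs -chown etluser:hadoop /user/etluser
    create_home_dir("etluser", "hadoop")
```

Building argument lists instead of shell strings keeps user names from being reinterpreted by a shell when the commands are executed.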

If we don’t set the hadoop.tmp.dir parameter, Hadoop by default creates the directories dfs and nm-local-dir under /tmp/hadoop-${user.name}.

Since we did not set the parameter in core-site.xml and logged in to the system as hdadmin, the directories dfs and nm-local-dir were created.

We should make sure to set the permissions on the Hadoop temp directory that we have specified in the core-site.xml file:

$ hdfs dfs -chmod -R 777 /tmp

The hadoop.tmp.dir property is set in core-site.xml as follows:

<property>
  <name>hadoop.tmp.dir</name>
  <value>/tmp/hadoop-${user.name}</value>
</property>
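If you prefer to script the core-site.xml change rather than edit the file by hand, the following Python sketch uses the standard library's xml.etree.ElementTree to set or add a property. The function name set_property is hypothetical. Note that ElementTree discards XML comments, the XML declaration and any stylesheet processing instruction when it re-serializes, so keep a backup of the original file.

```python
import xml.etree.ElementTree as ET


def set_property(config_xml, name, value):
    """Return config_xml with property `name` set to `value` (added if missing)."""
    root = ET.fromstring(config_xml)
    for prop in root.findall("property"):
        # Trim the name so "<name> x </name>" is still recognised.
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = value
            break
    else:
        # No existing definition: append a new <property> element.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    core_site = "<configuration></configuration>"
    print(set_property(core_site, "hadoop.tmp.dir", "/tmp/hadoop-${user.name}"))
```

For an empty configuration this prints `<configuration><property><name>hadoop.tmp.dir</name><value>/tmp/hadoop-${user.name}</value></property></configuration>`. The `${user.name}` placeholder is written literally and left for Hadoop to expand at runtime.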

- Change the value of the property “dfs.permissions.enabled” from “true” to “false” in hdfs-site.xml

This option should only be considered for a cluster in a development environment or a POC exercise. It is not advisable in production, where security, authorization, etc. on the ingested data must remain intact.

<property>
  <name>dfs.permissions.enabled</name>
  <value>false</value>
</property>

Once it is set to false, permission checking is turned off, but all other behavior is unchanged. Also, switching from one value to the other does not change the mode, owner, or group of files or directories.
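Because this setting is meant only for development or POC clusters, it can help to check hdfs-site.xml before promoting a configuration. The Python sketch below reads the effective value of dfs.permissions.enabled with xml.etree.ElementTree. The helper names and the environment label "production" are hypothetical, and the parsing rules (trimmed names and values, case-insensitive "false", a later definition replacing an earlier one, true when absent) are a simplified assumption about how Hadoop's Configuration class behaves; it ignores `<final>`, variable expansion and settings coming from other configuration resources.

```python
import xml.etree.ElementTree as ET


def permissions_enabled(hdfs_site_xml):
    """Effective value of dfs.permissions.enabled (simplified reading)."""
    root = ET.fromstring(hdfs_site_xml)
    raw = None
    for prop in root.findall("property"):
        # Names are compared after trimming, so "<name> x </name>" still matches.
        if (prop.findtext("name") or "").strip() == "dfs.permissions.enabled":
            raw = prop.findtext("value")  # a later definition replaces an earlier one
    if raw is None:
        return True  # the property defaults to true
    # Only an explicit "false" disables checking; anything else keeps the default.
    return raw.strip().lower() != "false"


def require_permissions(hdfs_site_xml, environment):
    """Refuse a configuration that disables permission checks in production."""
    if environment == "production" and not permissions_enabled(hdfs_site_xml):
        raise RuntimeError("dfs.permissions.enabled must stay true in production")


if __name__ == "__main__":
    dev_site = (
        "<configuration><property>"
        "<name>dfs.permissions.enabled</name><value>false</value>"
        "</property></configuration>"
    )
    print(permissions_enabled(dev_site))          # False
    require_permissions(dev_site, "development")  # passes silently
```

Such a check only reads the configuration; as noted above, flipping the value never rewrites the mode, owner or group stored for existing files.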

By Gautam Goswami