Orchestrating a Multi-Broker Kafka Cluster through CLI Commands

This short article lists the commands for managing a running multi-broker, multi-topic Kafka cluster using Kafka's built-in scripts. These commands are helpful when the cluster is not hooked up to a third-party administrative tool with a GUI for administering or controlling it on the fly; most such tools are not free to use. You can refer here to set up a multi-broker Kafka cluster.

By executing the built-in scripts in the bin directory of the Kafka installation with the required parameters, we can extract information from a running cluster and manage its topics, partitions, replication factor, and so on, as well as authorization. Here I have listed a few commands that mainly relate to topic management and to retrieving some basic information from the running cluster.

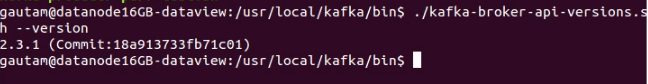

- Get the version of Kafka installed on the cluster using kafka-broker-api-versions.sh

$Kafka_Home/bin$ ./kafka-broker-api-versions.sh --version

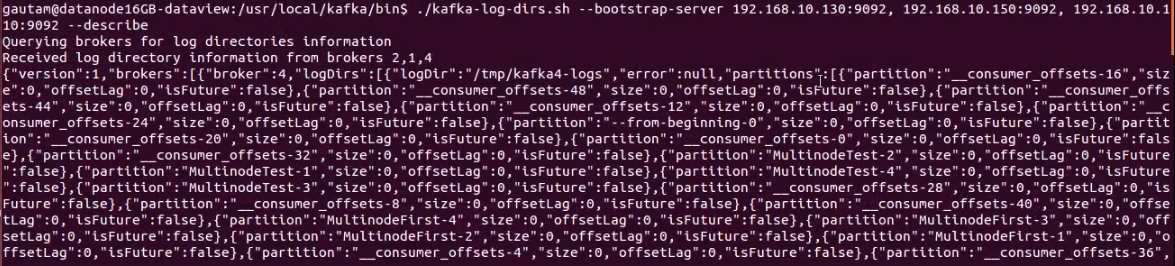

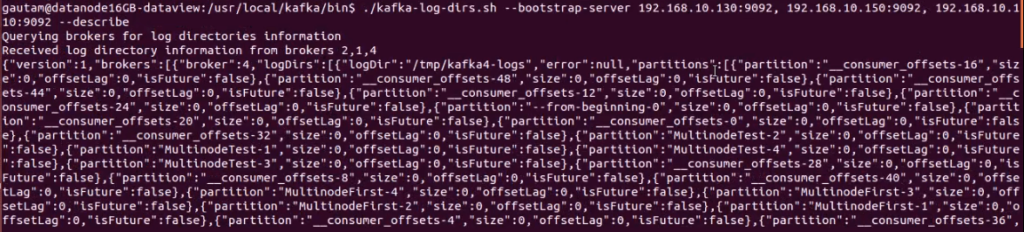

- Retrieve log directory information from the brokers using kafka-log-dirs.sh. Here we need to pass the list of bootstrap servers, that is, the IP address and port on which each broker in the cluster is listening. This information helps locate the log files of each broker for further analysis if an issue or exception occurs in a cluster running in production.

$Kafka_Home/bin$ ./kafka-log-dirs.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --describe

kafka-log-dirs.sh accepts only --bootstrap-server; it has no --zookeeper option, so the addresses must be those of the brokers and not of the ZooKeeper servers. The list must not contain spaces after the commas.

As an example, with <broker port> standing for each broker's listener port:

$Kafka_Home/bin$ ./kafka-log-dirs.sh --bootstrap-server 192.168.10.130:<broker port>,192.168.10.150:<broker port>,192.168.10.110:<broker port> --describe
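The comma-separated address list is easy to get wrong by hand, so the lookup above can be sketched as a small script. This is only an illustration: port 9092 (the Kafka default listener port), the KAFKA_HOME variable, its /opt/kafka fallback, and the RUN switch are assumptions, not part of the original setup. By default the script only prints the command it would run.

```shell
#!/bin/sh
# Assumed broker addresses: the IPs come from the example above,
# port 9092 is the Kafka default and may differ on your cluster.
BROKERS="192.168.10.130:9092 192.168.10.150:9092 192.168.10.110:9092"

# Join the space-separated list with commas and no spaces,
# which is the form --bootstrap-server expects.
BOOTSTRAP=$(echo "$BROKERS" | tr -s ' ' ',')

# KAFKA_HOME is an assumed variable pointing at the Kafka installation.
CMD="${KAFKA_HOME:-/opt/kafka}/bin/kafka-log-dirs.sh --bootstrap-server $BOOTSTRAP --describe"

# Print the command by default; set RUN=1 to execute it against the cluster.
if [ "${RUN:-0}" = "1" ]; then
  $CMD
else
  echo "$CMD"
fi
```

Running it with RUN=1 executes the command against the cluster instead of printing it.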

- Get the list of available Kafka topics in the entire cluster using kafka-topics.sh. As an argument, we have to provide the list of bootstrap servers, that is, the IP address and port of each broker.

$Kafka_Home/bin$ ./kafka-topics.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --list

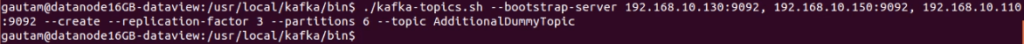

- Create a Kafka topic in the cluster using kafka-topics.sh

$Kafka_Home/bin$ ./kafka-topics.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --create --replication-factor <replication factor number> --partitions <Number of partitions in the topic> --topic <Topic Name>

Note: The replication factor can't be higher than the number of brokers in the cluster; it must be less than or equal to it. If we provide a replication factor higher than the number of brokers, an InvalidReplicationFactorException is reported in the console.

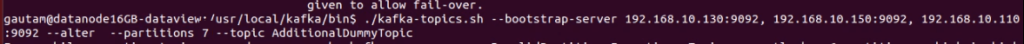
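The replication-factor rule in the note can be checked before the create command is issued. The following is a minimal sketch, assuming a cluster of three brokers; the function name valid_replication_factor and the BROKER_COUNT variable are hypothetical helpers, not part of Kafka.

```shell
#!/bin/sh
# Succeeds (exit status 0) when the requested replication factor is
# at least 1 and no larger than the number of available brokers.
valid_replication_factor() {
  requested=$1
  available=$2
  [ "$requested" -ge 1 ] && [ "$requested" -le "$available" ]
}

BROKER_COUNT=3   # assumed: three brokers in the cluster

for rf in 1 3 4; do
  if valid_replication_factor "$rf" "$BROKER_COUNT"; then
    echo "replication factor $rf: ok"
  else
    echo "replication factor $rf: rejected (only $BROKER_COUNT brokers)"
  fi
done
```

With three brokers, factors 1 and 3 pass the check, while 4 is the case that would trigger the exception described above.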

- Alter or modify a Kafka topic that already exists on the brokers in the cluster. Take care when changing the number of partitions of an existing Kafka topic that already holds messages. Specifically, if partitions are added, the partitioning logic maps keys to different partitions, so the ordering of messages in that topic is affected. We can't decrease the number of partitions of a Kafka topic once it has been created. It is advisable to alter only a Kafka topic that does not yet hold any messages.

$Kafka_Home/bin$ ./kafka-topics.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --alter --partitions <Number of partitions to increase the topic to> --topic <Topic Name>

The replication factor of a Kafka topic can't be altered with the alter command.

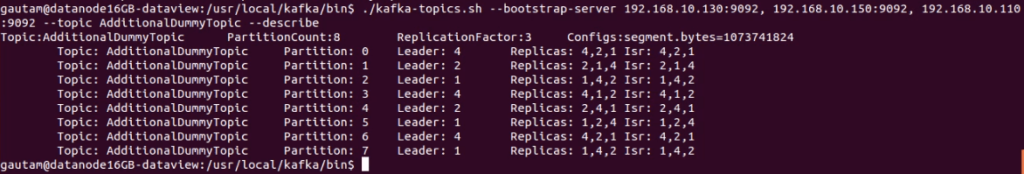
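The warning about adding partitions comes down to simple arithmetic: for a keyed record, the partition is the key's hash modulo the partition count, so changing the count changes where a given key lands. The sketch below shows only that arithmetic. Kafka's default partitioner hashes the key bytes with murmur2; the hash values 10, 11 and 12 here are made-up stand-ins, and partition_for is a hypothetical helper.

```shell
#!/bin/sh
# Partition for a keyed record: hash(key) modulo the partition count.
# $1 is a stand-in hash value, $2 is the number of partitions.
partition_for() {
  echo $(( $1 % $2 ))
}

# Compare where the same hash lands before and after going from 3 to 4 partitions.
for hash in 10 11 12; do
  echo "hash=$hash  3 partitions -> $(partition_for "$hash" 3)  4 partitions -> $(partition_for "$hash" 4)"
done
```

A key whose hash is 10 moves from partition 1 to partition 2, so new records for that key are no longer appended behind the older ones, and per-key ordering across the change is lost.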

- Describe a Kafka topic. By adding the describe argument to kafka-topics.sh, the description of an existing Kafka topic can be viewed.

$Kafka_Home/bin$ ./kafka-topics.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --topic <Topic Name> --describe

- Delete a Kafka topic. With the delete argument, we can delete an existing Kafka topic in the cluster.

$Kafka_Home/bin$ ./kafka-topics.sh --bootstrap-server <list of IP Address and port separated by comma(,) > --delete --topic <Topic Name>

On top of the kafka-topics.sh commands above, we can also change the retention time of records on a Kafka topic and purge the topic; in recent Kafka versions, topic configuration changes such as retention go through kafka-configs.sh. I have skipped the producer, consumer, mirror maker, etc., and security (authorization of a Kafka topic using kafka-acls.sh) in this write-up.

I hope the above helps you deal with these fundamental administrative tasks in a Kafka cluster, and that you enjoyed the read.
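As a rough sketch of the retention change mentioned above: the retention period is set through the topic-level retention.ms setting, expressed in milliseconds. The example assumes a Kafka version whose kafka-configs.sh accepts --bootstrap-server for topic entities; the topic name, broker address, KAFKA_HOME fallback, and days_to_ms helper are all hypothetical. The command is printed, not executed.

```shell
#!/bin/sh
# Convert a retention period in days to milliseconds for retention.ms.
days_to_ms() {
  echo $(( $1 * 24 * 60 * 60 * 1000 ))
}

TOPIC="my-topic"             # hypothetical topic name
BOOTSTRAP="localhost:9092"   # hypothetical broker address
RETENTION_MS=$(days_to_ms 7)

# Print the kafka-configs.sh invocation that would set a 7-day retention.
echo "${KAFKA_HOME:-/opt/kafka}/bin/kafka-configs.sh --bootstrap-server $BOOTSTRAP" \
     "--entity-type topics --entity-name $TOPIC --alter --add-config retention.ms=$RETENTION_MS"
```

Seven days works out to 604800000 milliseconds. A common way to purge a topic is to lower retention.ms temporarily and then restore the previous value once the old records have been removed.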

Reference: https://docs.confluent.io/current/administer.html

Written by

Gautam Goswami