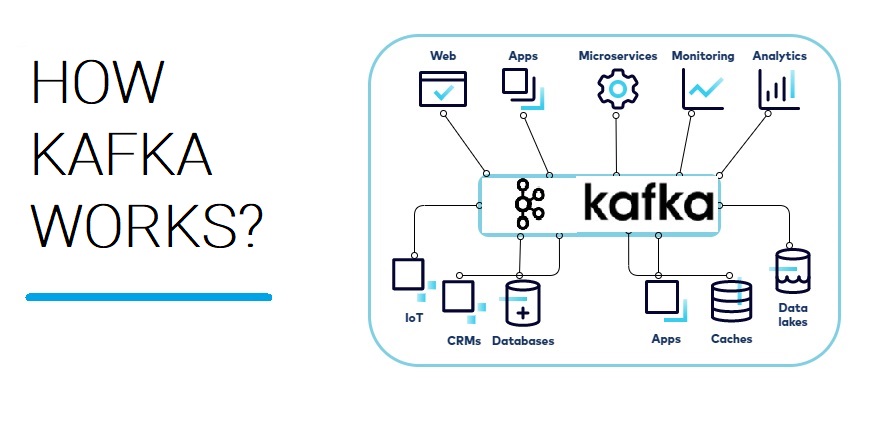

How Does Kafka Work?

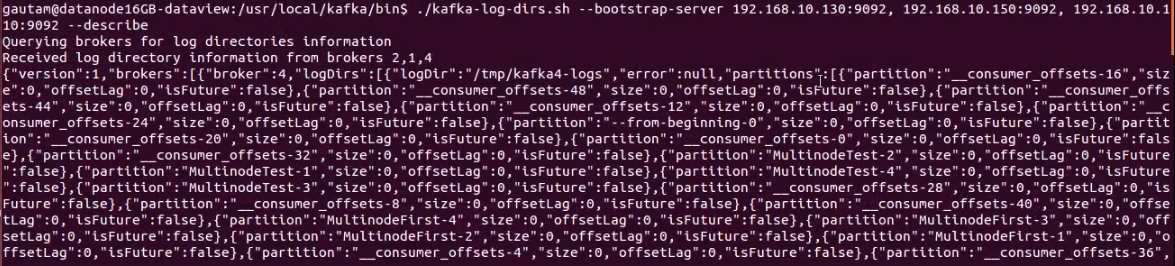

Real-time data streaming with Kafka is a popular and powerful approach to handling large volumes of data and facilitating communication between different systems and applications. Apache Kafka is an open-source distributed event streaming platform that allows you to publish, subscribe to, store, and process streams of records. Here's a general overview of how real-time data streaming with Kafka works:

Topic and Message Model: Data is organized into topics, which are essentially log-like data streams. Each message within a topic consists of a key, a value, and a timestamp. Publishers...
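
To make the topic and message model a little more concrete, here is a minimal in-memory sketch in Python. It is not Kafka's API and does not talk to a broker: the `Record` and `Topic` classes and the `append` and `read_from` methods are hypothetical names invented for illustration. It only shows the idea of a topic as an append-only log whose entries carry a key, a value, and a timestamp. Real Kafka additionally splits each topic into partitions and tracks positions (offsets) per partition, which this toy ignores.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Record:
    """One message: a key, a value, and a timestamp (hypothetical type)."""
    key: Optional[str]
    value: str
    timestamp_ms: int


@dataclass
class Topic:
    """Toy stand-in for a topic: a named, append-only list of records."""
    name: str
    log: List[Record] = field(default_factory=list)

    def append(self, key: Optional[str], value: str,
               timestamp_ms: Optional[int] = None) -> int:
        """Add a record to the end of the log and return its position."""
        if timestamp_ms is None:
            # Default to the current wall-clock time in milliseconds.
            timestamp_ms = int(time.time() * 1000)
        self.log.append(Record(key, value, timestamp_ms))
        return len(self.log) - 1

    def read_from(self, offset: int) -> List[Record]:
        """Return every record at or after the given position, in order."""
        return self.log[offset:]


# Example: two writes to an "orders" topic, then a read from position 1.
orders = Topic("orders")
first = orders.append("user-1", "order created", timestamp_ms=1_000)
second = orders.append("user-2", "order created", timestamp_ms=2_000)
later_records = orders.read_from(1)
```

Because existing records are never modified, a reader only has to remember the position it reached and can resume from there later, which is the property the "log-like" description refers to.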