Why Lambda Architecture in Big Data Processing

Driven by the exponential growth of digitization, the world generates at least 2.5 quintillion (2,500,000,000,000 million) bytes of data every day, a volume we refer to as Big Data. This data comes from everywhere: social media sites, sensors, satellites, purchase transactions, mobile devices, GPS signals and much more. As technology advances, data generation shows no sign of slowing down; instead, volumes will keep growing massively. Major organizations, retailers, companies across verticals and enterprise product vendors have started leveraging big data technologies to produce actionable insights and drive business expansion and growth.

Lambda Architecture is a design framework for processing huge volumes of data using both stream and batch processing. Stream processing analyzes data on the fly, while it is in motion and without first persisting it to storage, whereas batch processing is applied to data at rest, that is, data already persisted in storage such as databases or data warehousing systems. Lambda Architecture can be used to balance latency, throughput, scaling and fault tolerance, and to obtain comprehensive and accurate views from batch and real-time stream processing simultaneously.

We can divide Big Data processing as a whole into two data pipelines. The first handles data at rest: a massive volume of data is collected from different sources, stored in a distributed manner and then analysed to get an accurate view on which business decisions can be based. We can call this the Batch Data-processing Pipeline.

The second is the Streaming Data Pipeline, where analysis is done while data is in motion and the computation runs on the live data stream. Apache Spark is an excellent framework for this. Spark chops the live stream into small batches, holds them in memory, processes them and finally releases them from its memory back into the data flow. Because the computation happens in memory, latency drops significantly.
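The micro-batching idea described above can be sketched in a few lines of plain Python. This is only an illustration, not Spark code: the names `micro_batches` and `process_stream` are hypothetical, the batches are cut by record count rather than by a time interval, and a simple sum stands in for the real computation.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(stream: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Cut a (possibly unbounded) stream into small in-memory batches."""
    iterator = iter(stream)
    while True:
        # Hold at most batch_size records in memory at a time.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the stream is exhausted
        yield batch


def process_stream(stream: Iterable[int], batch_size: int) -> List[int]:
    """Run a stand-in computation (a sum) on each micro-batch."""
    results = []
    for batch in micro_batches(stream, batch_size):
        results.append(sum(batch))
        # The batch goes out of scope here, which mirrors releasing it from memory.
    return results


if __name__ == "__main__":
    print(process_stream(range(1, 8), batch_size=3))
```

Only one small batch is held in memory at any moment, which is why the approach keeps latency low even when the overall stream never ends.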

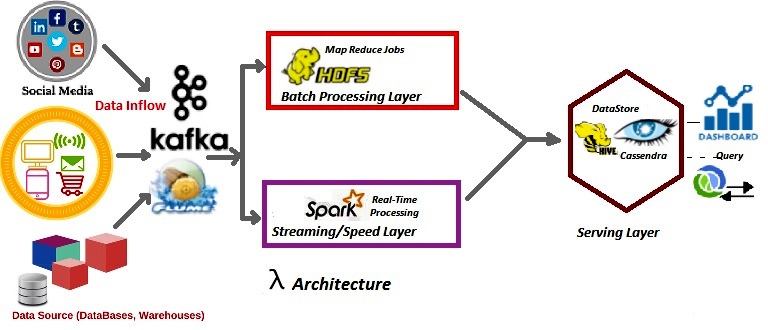

Nathan Marz, then at Twitter, was the first to design the Lambda Architecture for big data processing. It can be divided into four major layers. As the architecture diagram shows, the layers run from Data Ingestion through to the Presentation/View or Serving layer.

– The Data Ingestion or consumption layer can include tools such as Apache Kafka and Flume, which are responsible for gathering data from multiple sources. Depending on whether the data must be processed in batches, as a live stream or as a combination of both, the flow bifurcates here, like the Lambda sign (λ).

– In the Batch layer, all the data is accumulated before any computation runs on top of it. Fault tolerance and replication can be achieved here to prevent data loss. The Hadoop Distributed File System (HDFS) is a candidate for this layer.

– The Streaming or Speed layer is responsible for processing live streaming data without persisting it in storage; processing takes place while the data is in motion. This layer is triggered either as data arrives or at a specific short time interval, and it generates the real-time view that is pushed to the next layer (the Serving layer).

– Finally, in the Serving layer, we get the combined results from the streaming layer and the batch layer, which can be used to provide a unified result. This layer always receives updates from the batch layer and the streaming layer, either periodically or in real time.
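To make the interplay of the four layers concrete, here is a minimal in-memory sketch in Python. It is a toy model under stated assumptions, not a production design: the class `LambdaPipeline` and its methods are hypothetical names, a Python list stands in for distributed storage such as HDFS, and counting events per key stands in for the real analysis.

```python
from collections import Counter
from typing import List


class LambdaPipeline:
    """Toy model of the ingestion, batch, speed and serving layers."""

    def __init__(self) -> None:
        self.master_dataset: List[str] = []  # batch layer storage (stand-in for HDFS)
        self.batch_view: Counter = Counter()     # accurate view, rebuilt periodically
        self.realtime_view: Counter = Counter()  # view of events since the last batch run

    def ingest(self, event: str) -> None:
        """Ingestion layer: the flow splits into two branches, like the λ sign."""
        self.master_dataset.append(event)  # branch 1: accumulate for the batch layer
        self.realtime_view[event] += 1     # branch 2: speed layer updates on arrival

    def run_batch(self) -> None:
        """Batch layer: recompute the view from all accumulated data."""
        self.batch_view = Counter(self.master_dataset)
        # Everything seen so far is now covered by the batch view.
        self.realtime_view = Counter()

    def query(self, key: str) -> int:
        """Serving layer: combine the batch view and the real-time view."""
        return self.batch_view[key] + self.realtime_view[key]


if __name__ == "__main__":
    pipeline = LambdaPipeline()
    for event in ["click", "view", "click"]:
        pipeline.ingest(event)
    pipeline.run_batch()
    pipeline.ingest("click")  # arrives after the batch run
    print(pipeline.query("click"))
```

A query always reflects the latest events because the real-time view covers whatever the most recent batch run has not yet absorbed.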

Lambda Architecture is a pluggable architecture in which any number of data generation sources can be plugged in and out to process data on demand.