Few intrinsic of Apache Zookeeper and their importance

As a bird’s eye view, Apache Zookeeper has been leveraged to get coordination services for managing distributed applications. Holds responsibility for providing configuration information, naming, synchronization, and group services over large clusters in distributed systems. To consider as an example, Apache Kafka uses Zookeeper for choosing their leader node for the topic partitions. Please click here if you want read on how to setup the multi-node Apache Zookeeper cluster on Ubuntu/Linux

zNodes

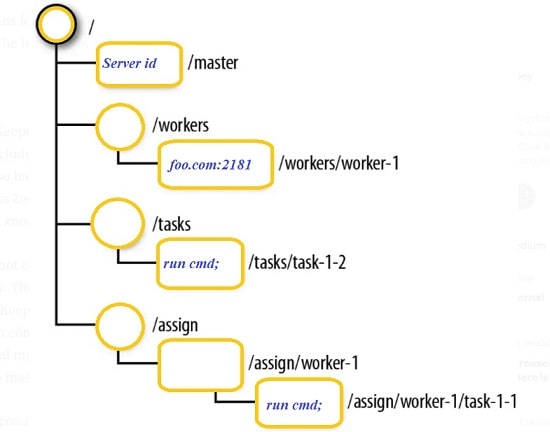

The key concept of the Zookeeper is the znode which can be acted either as files or directories. Znodes can be replicated between servers as they are working in a distributed file system. Znode can be described by a data structure called stats and it consolidates information about zNode context like creation time, number of changes (as version), number of children, length of stored data or zxid (ZooKeeper transaction id) of creation, and last change. For every modification of zNodes, Its version increases. The zNodes are classified into three categories persistence, sequential, and ephemeral. Persistence znode is alive even after the client, which created that particular znode, is disconnected. Also, they survive after ZooKeeper restarted. Ephemeral znodes are active until the client is alive. As soon as the client gets disconnected from the ZooKeeper ensemble, then the ephemeral znodes also get deleted automatically. Sequential znodes can be either persistent or ephemeral. Once a new znode is created as a sequential znode, then ZooKeeper sets the path of the znode by attaching a 10-digit sequence number to the original name. The sequential znode can be easily differentiated from the normal zNode with the help of different suffixes. The zNodes can have public or more restricted access. The access rights can be managed by special ACL permissions.

Sessions

Apache ZooKeeper’s operation relies heavily on sessions. The session will be established and the client will be given a session id (a 64-bit number) when the client connects to the Zookeeper server. A session has a timeout period which is specified in milliseconds. The session might get expired when the connection remains idle for more than the timeout period. The Sessions are kept alive by the client sending a ping request (heartbeat) to the ZooKeeper service. By using a TCP connection, a client maintains the sessions with the Zookeeper server. When a session ends for any reason, the ephemeral znodes created during that session also get deleted. The right session timeout is determined by several factors, including the size of the ZooKeeper ensemble, application logic complexity, and network congestion.

Watches

The client can easily receive notifications about changes to the ZooKeeper ensemble through watches. The clients are able to set watches while reading a specific znode. Any time a znode (on which the client registers) changes, watches notify the registered client. Data associated with the znode or changes in the znode’s children are referred to as znode changes. Watches are only activated once. A client must perform a second read operation if they want a notification again. The client will be disconnected from the server and the associated watches will also be removed when a connection session expires. The watches registered on a znode can be removed with a call to removeWatches. Also, a ZooKeeper client can remove watches locally even if there is no server connection by setting the local flag to true.

Zookeeper Quorum

It refers to the bare minimum of server nodes that must be operational and accessible to client requests. For a transaction to be successful, any client-generated updates to the ZooKeeper tree must be persistently stored in this quorum of nodes. Using the formula Q = 2N+1, where Q is the number of nodes required to form a healthy ensemble and N is the maximum number of failure nodes, quorum specifies the rule for forming a healthy ensemble. The above formula can be considered to decide what is the safest and optimal size of a quorum. The Ensemble can be defined simply as a group of ZooKeeper servers. The minimum number of nodes that are required to form an ensemble is 3. A five-node ZooKeeper ensemble can handle two node failures because a quorum can be established from the remaining three nodes as per the formula Q = 2N+1.

The following entries can be defined as the quorum of Zookeeper servers and must be available in zoo.cfg file located under conf directory.

server.1=zoo1:2888:3888

server.2=zoo2:2888:3888

server.3=zoo3:2888:3888

And they follow the pattern as

server.X=server_name:port1:port2

server.X, where X is the server number in ASCII. Prior to that, we will have to create a file named as myid under the Zookeeper data directory in each Zookeeper server. This file should contain the server number X as an entry in it. server_name is the hostname of the node where the Zookeeper service is started.

port1, the ZooKeeper server uses this port to connect followers to the leader.

port2, this port is used for leader election.

Transactions

Transaction in Apache Zookeeper is atomic and idempotent and involves two steps namely leader election and atomic broadcast. ZooKeeper uses ZooKeeper Atomic Broadcast (ZAB), a unique atomic messaging protocol. Because it is atomic, the ZAB protocol ensures that updates will either succeed or fail.

Local Storage and Snapshots

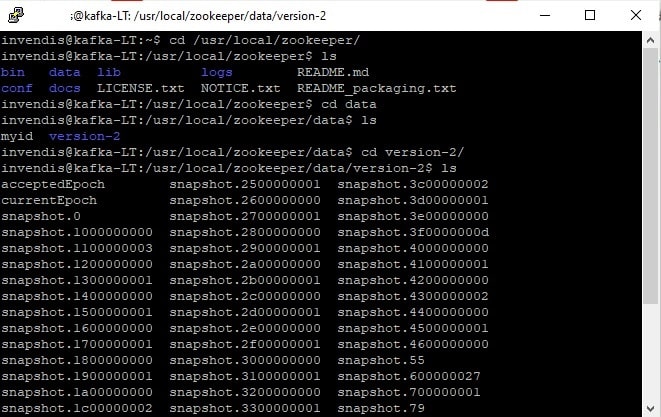

Transactions are stored in local storage on ZooKeeper servers. The ZooKeeper Data Directory contains snapshot and transactional log files which are persistent copy of the znodes stored by an ensemble. The transactions are logged to transaction logs. Any changes to znodes are appended to transaction log and when the log file size increases, a snapshot of the current state of znodes is written to the filesystem. In below image, we can see how snapshots are getting persisted inside Zookeeper’s data directory.

The ZooKeeper tracks a fuzzy state of its own data tree within the snapshot files. Because ZooKeeper transaction logs are written at a rapid rate, it is critical that they be configured on a disk separate from the server’s boot device. In the event of a catastrophic failure or user error, the transactional logs and snapshot files in Apache ZooKeeper make it possible to recover data. Inside zoo.cfg file available under conf directory of Zookeeper server, the data directory is specified by the dataDir parameter and the data log directory is specified by the dataLogDir parameter.

Hope you have enjoyed this read. Please like and share if you feel this composition is valuable.

Written by

Gautam Goswami