Apache Flink – A 4G Data Processing Engine

Analyzing streaming data in large-scale systems is increasingly central to making accurate business decisions, as digital data sources, including social media, multiply around the globe. Real-time analytics is especially attractive because it can extract insight from the time-value of data, that is, while the data is still in motion.

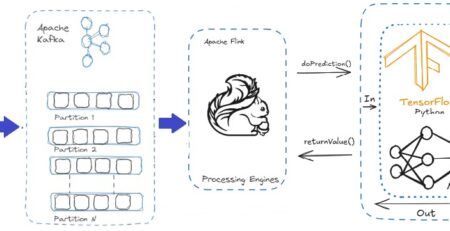

Apache Flink is an open source, highly innovative stream processing engine that helps us take advantage of stream-based approaches. Besides providing fault-tolerant, truly real-time analytics, it can analyze historical data and simplify data pipelines. Flink also supports batch jobs. Before looking at why Flink has been designated a 4th-generation data processing engine in the Big Data world, we need to be familiar with a few data stream definitions.

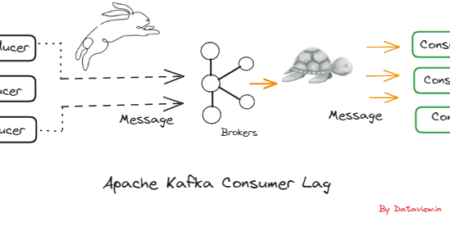

A data element is the smallest unit of data that a data streaming application can process and send over the network. A data stream is a continuous, partitioned, and partially ordered sequence of data elements that is potentially infinite. A data stream source continuously produces a possibly infinite number of data elements, which therefore cannot be loaded into memory all at once; the Twitter stream is one example. A data stream sink is an operator that has an input data stream but no output data stream; a database is a typical sink. The MapReduce programming model offers an analogy: a mapper consumes data elements from the input reader and processes them, and the results are subsequently written back to HDFS.
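The definitions above can be sketched in a few lines of plain Python. This is a conceptual illustration only and does not use the Flink API; the names `number_source`, `square`, and `CollectingSink` are hypothetical.

```python
import itertools
from typing import Iterable, Iterator, List


def number_source() -> Iterator[int]:
    """Data stream source: yields a potentially infinite sequence of elements."""
    return itertools.count(start=1)


def square(stream: Iterable[int]) -> Iterator[int]:
    """Operator: transforms one data element at a time, never the whole stream."""
    for element in stream:
        yield element * element


class CollectingSink:
    """Data stream sink: consumes an input stream and emits no output stream."""

    def __init__(self) -> None:
        self.stored: List[int] = []

    def consume(self, stream: Iterable[int]) -> None:
        for element in stream:
            self.stored.append(element)


sink = CollectingSink()
# The source is unbounded, so take a finite prefix for the demonstration.
sink.consume(itertools.islice(square(number_source()), 5))
print(sink.stored)  # [1, 4, 9, 16, 25]
```

Because the source is a generator, no element exists until the sink asks for it, which is why an infinite source never has to fit in memory.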

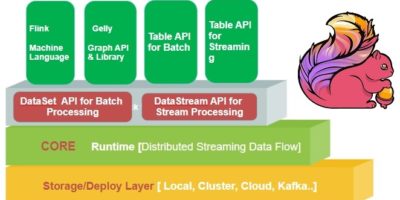

Although Apache Flink does not provide its own storage layer, as Hadoop does with HDFS, it can be deployed on a local setup such as a laptop or desktop with a single JVM, on single-node or multi-node clusters with YARN, and in the cloud, for example on Amazon EC2 or Google Cloud.

Flink Runtime is the core computational layer of Flink. It works in a distributed manner and accepts streaming dataflow programs, which it executes in a fault-tolerant way. It can run on a multi-node cluster where YARN (Yet Another Resource Negotiator) is already installed and configured, while a single machine is the best option for debugging Flink applications. On top of the Runtime, Flink provides two sets of APIs: the DataStream API for stream processing and the DataSet API for batch processing.

When we submit a program to the Flink cluster, it is compiled and pre-processed to produce a DAG (Directed Acyclic Graph) of operations that will be applied to the data. Before execution, Flink's built-in optimizer tries to transform the program into an equivalent one that minimizes the time to result: it inspects the DAG and modifies it for the best possible performance before handing it over to the runtime.
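To make the idea of rewriting a program into an equivalent, cheaper one concrete, here is a toy sketch in plain Python. It is not Flink's optimizer: the plan is a simple linear chain (the simplest possible DAG), and `MapOp`, `fuse_maps`, and `run` are hypothetical names chosen for illustration. The rewrite fuses consecutive map operators so the data is traversed once instead of once per operator.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class MapOp:
    name: str
    fn: Callable[[int], int]


def fuse_maps(plan: List[MapOp]) -> List[MapOp]:
    """Toy rewrite: merge a chain of map operators into a single operator."""
    if not plan:
        return []
    fused = plan[0]
    for op in plan[1:]:
        fused = MapOp(
            name=f"{fused.name}+{op.name}",
            # Bind the current functions as defaults to avoid late binding.
            fn=lambda x, f=fused.fn, g=op.fn: g(f(x)),
        )
    return [fused]


def run(plan: List[MapOp], data: Iterable[int]) -> List[int]:
    """Execute the plan naively: one full pass over the data per operator."""
    out = list(data)
    for op in plan:
        out = [op.fn(x) for x in out]
    return out


original_plan = [
    MapOp("add_one", lambda x: x + 1),
    MapOp("double", lambda x: x * 2),
    MapOp("minus_three", lambda x: x - 3),
]
optimized_plan = fuse_maps(original_plan)

data = [1, 2, 3]
print(optimized_plan[0].name)     # add_one+double+minus_three
print(run(original_plan, data))   # [1, 3, 5]
print(run(optimized_plan, data))  # [1, 3, 5]
```

The two plans produce the same result, but the optimized plan has one operator instead of three. A real optimizer weighs many more alternatives, such as join strategies and data shipping, which this sketch does not attempt to model.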

Flink manages its own memory, implementing custom memory management that is separate from the JVM (Java Virtual Machine) memory management system. Because Flink keeps its managed data within a fixed memory budget rather than overrunning the JVM heap, out-of-memory errors during program execution become far less likely, and the overhead of the GC (Garbage Collector) decreases significantly.

Flink processes streaming data record by record rather than in true micro-batches, but it does collect records into buffers in memory before they are shuffled over the network. From the application developer's perspective, we should not need to be concerned with whether and how a system batches records internally. Flink also uses special in-memory data structures for hashing and sorting that can partially spill data from memory to disk when needed, which makes disk spilling and network transfer very efficient.
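The general technique of spilling from memory to disk during a sort can be illustrated with a small external merge sort in plain Python. This is a sketch of the idea only, not Flink's implementation; `external_sort`, `_spill`, and the `max_in_memory` parameter are hypothetical names.

```python
import heapq
import tempfile
from typing import Iterable, Iterator, List


def _spill(sorted_run: List[int]):
    """Write one sorted run to a temporary file and rewind it for reading."""
    f = tempfile.TemporaryFile(mode="w+")
    f.writelines(f"{value}\n" for value in sorted_run)
    f.seek(0)
    return f


def external_sort(values: Iterable[int], max_in_memory: int) -> Iterator[int]:
    """Sort values while buffering at most max_in_memory of them at a time."""
    runs = []
    buffer: List[int] = []
    for value in values:
        buffer.append(value)
        if len(buffer) >= max_in_memory:
            # Buffer is full: sort it and spill the run to disk.
            runs.append(_spill(sorted(buffer)))
            buffer = []
    # Spilled runs are read back lazily, one line at a time.
    streams = [(int(line) for line in f) for f in runs]
    # The final partial run never touched the disk.
    streams.append(iter(sorted(buffer)))
    try:
        yield from heapq.merge(*streams)
    finally:
        for f in runs:
            f.close()


data = [9, 3, 7, 1, 8, 2, 6, 4, 5]
result = list(external_sort(data, max_in_memory=3))
print(result)  # [1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Only full buffers are written to disk, so small inputs are sorted entirely in memory, which mirrors the "partially spill when needed" behaviour described above.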

Flink provides many built-in transformation operators, such as map, flatMap, Iterate, Delta Iterate, and GroupReduce. Benchmarks published by Yahoo and data Artisans suggest that Flink performs impressively with huge amounts of data. The savepoints feature offers another significant advantage: Flink lets us stop the cluster, update the code we are running, and start it again, all without losing data.
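For readers unfamiliar with these operators, the following plain-Python sketch shows what map, flatMap, and GroupReduce do conceptually, using a word count as the example. The helper functions `flat_map`, `map_each`, and `group_reduce` are hypothetical stand-ins written for this illustration, not Flink API calls.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


def flat_map(fn: Callable, stream: Iterable) -> Iterator:
    """flatMap: each input element may produce zero, one, or many outputs."""
    for element in stream:
        yield from fn(element)


def map_each(fn: Callable, stream: Iterable) -> Iterator:
    """map: each input element produces exactly one output."""
    for element in stream:
        yield fn(element)


def group_reduce(key_fn: Callable, reduce_fn: Callable, stream: Iterable) -> Dict:
    """GroupReduce: group elements by key, then reduce each whole group."""
    groups: Dict = defaultdict(list)
    for element in stream:
        groups[key_fn(element)].append(element)
    return {key: reduce_fn(group) for key, group in groups.items()}


def count_group(group: List[Tuple[str, int]]) -> int:
    """Sum the counts carried by every (word, count) pair in a group."""
    return sum(count for _, count in group)


lines = ["to be or not to be", "to stream or to batch"]
words = flat_map(str.split, lines)              # one line -> many words
pairs = map_each(lambda word: (word, 1), words)  # one word -> one pair
counts = group_reduce(lambda pair: pair[0], count_group, pairs)
print(counts["to"], counts["be"], counts["batch"])  # 4 2 1
```

The first two helpers are lazy generators, so nothing is computed until `group_reduce` pulls elements through the chain.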